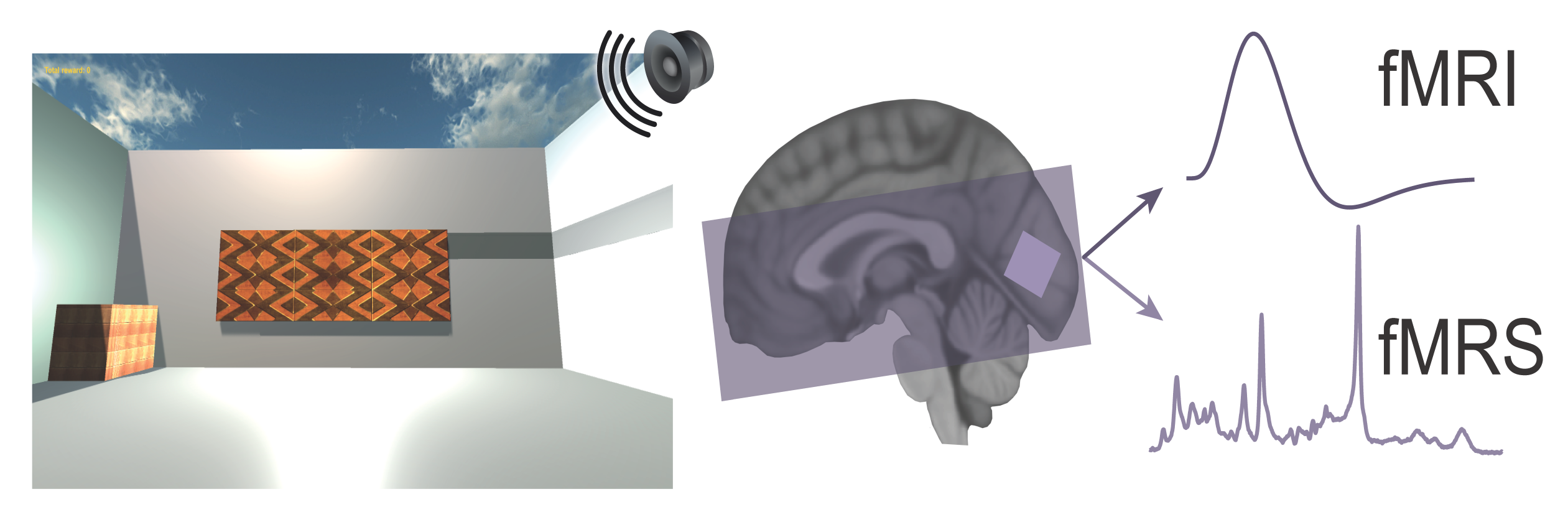

Combined fMRI-fMRS dataset in an inference task in humans

This dataset consists of the following components:

- group-level fMRI maps for contrasts of interest (NIfTI)

- raw fMRS data of 19 subjects (DICOM)

- fMRS data of 19 subjects, preprocessed in MRspa (MATLAB .mat)

- behavioural data from the inference task performed during the MRI scan (MATLAB .mat)

- behavioural data from the associative test performed after the MRI scan (MATLAB .mat)

Participants performed a three-stage inference task across three days. On day 1, participants learned up to 80 auditory-visual associations. On day 2, each visual cue was paired with either a rewarding outcome (set 1, monetary reward) or a neutral outcome (set 2, woodchip). On day 3, auditory cues were presented in isolation (‘inference test’), without visual cues or outcomes, and we measured evidence for inference from the auditory cues to the appropriate outcome. Participants performed the inference test in an MRI scanner while combined fMRI-fMRS data were acquired. After the MRI scan, participants completed a surprise associative test for the auditory-visual associations learned on day 1.

fMRI data:

SPM group maps in MNI space showing:

- BOLD signal on inference test trials, contrasting auditory cues whose associated visual cue was ‘remembered’ versus ‘forgotten’

- Correlation between the contrast in the first item of this list and V1 fMRS measures of the glu/GABA ratio for ‘remembered’ versus ‘forgotten’ trials in the inference test

- BOLD signal on inference test trials contrasted with BOLD signal on conditioning trials, smoothed with a 5 mm kernel before second-level analysis

- BOLD signal on inference test trials contrasted with BOLD signal on conditioning trials, smoothed with a 5 mm kernel at the first level

- BOLD signal on inference test trials contrasted with BOLD signal on conditioning trials, smoothed with an 8 mm kernel at the first level

- BOLD signal on inference test trials, contrasting auditory cues whose associated visual cue was ‘remembered’ versus ‘forgotten’, smoothed with an 8 mm kernel at the first level
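
A group-level correlation map of this kind relates, across participants, the size of the ‘remembered’ versus ‘forgotten’ BOLD contrast to the corresponding difference in the V1 glu/GABA ratio. As a rough ROI-level illustration only (not the voxelwise SPM analysis behind the map), the sketch below assumes one contrast estimate and one glu/GABA ratio per condition for each participant, and computes a Pearson correlation. The function names and inputs are hypothetical.

```python
import math
from statistics import mean
from typing import List, Sequence


def ratio_difference(glu_gaba_remembered: Sequence[float],
                     glu_gaba_forgotten: Sequence[float]) -> List[float]:
    """Per-participant glu/GABA ratio difference (remembered minus forgotten).

    Hypothetical helper: assumes one ratio per condition per participant,
    listed in the same participant order in both sequences.
    """
    return [r - f for r, f in zip(glu_gaba_remembered, glu_gaba_forgotten)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    # Undefined (division by zero) if either sequence is constant.
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den
```

Usage would look like `pearson_r(bold_contrast, ratio_difference(ratio_rem, ratio_forg))`, where all three inputs are hypothetical per-participant lists in matching order.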

Regions of interest (ROIs) in MNI space:

- Hippocampal ROI

- Parietal-occipital cortex ROI

- Brainstem ROI

- Cumulative map of MRS voxel position across participants

fMRS data:

The raw fMRS data is included in DICOM format. Preprocessed data is included as a MATLAB structure for each subject, containing the following fields:

- Arrayedmetab: preprocessed spectra

- ws: water signal

- procsteps: preprocessing information

- ntmetab: total number of spectra

- params: acquisition parameters

- TR of each acquisition

- Block length: number of spectra acquired in each block
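
Each file stores the preprocessed spectra together with the total number of spectra (`ntmetab`) and the number of spectra per block. Assuming the spectra in `Arrayedmetab` are stored in acquisition order, block after block, a block-wise average could be formed as sketched below. The structure can be read in Python with, for example, `scipy.io.loadmat` (for files not saved in MATLAB v7.3 format); converting `Arrayedmetab` into a list of spectra, and the function names used here, are assumptions rather than part of the dataset.

```python
from typing import List, Sequence


def split_into_blocks(n_spectra: int, block_length: int) -> List[range]:
    """Indices of the spectra in each block.

    Assumes spectra are stored consecutively, block after block, and that
    n_spectra (cf. `ntmetab`) is an exact multiple of block_length.
    """
    if block_length <= 0:
        raise ValueError("block_length must be positive")
    if n_spectra % block_length != 0:
        raise ValueError("number of spectra is not a multiple of block_length")
    return [range(start, start + block_length)
            for start in range(0, n_spectra, block_length)]


def block_average(spectra: Sequence[Sequence[complex]],
                  block_length: int) -> List[List[complex]]:
    """Point-by-point average of the spectra within each block."""
    averages = []
    for block in split_into_blocks(len(spectra), block_length):
        n_points = len(spectra[block[0]])
        averages.append([
            sum(spectra[i][p] for i in block) / block_length
            for p in range(n_points)
        ])
    return averages
```

With four toy two-point spectra and a block length of 2, `block_average` returns two averaged spectra, one per block.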

Behavioural data from the inference task performed in the MRI scanner:

On each trial of the inference task, participants heard an auditory cue and were then asked whether they would like to look in the wooden box where they had previously found the outcomes (‘yes’ or ‘no’). The behavioural data from the inference test include columns containing the following information:

- Auditory stimulus: 0 (none) for conditioning trials, 1-80 for inference test trials

- Visual stimulus associated with the presented auditory stimulus (1-4)

- Migrating visual stimulus (1: no, 4: yes)

- Rewarded visual stimulus (0: no, 1: yes)

- Set during learning (1-8)

- Video number for inference test trials (1-32)

- Video number for conditioning trials (1-16)

- Trial type (2: conditioning, 3: inference)

- Trial start time

- Auditory stimulus/video play start time

- Question display time (inference trials) or outcome presentation time (conditioning trials)

- Trial end time

- Reaction time for inference test trials

- Response accuracy (0: incorrect, 1: correct)

- Wall on which the visual stimulus was presented (conditioning trials)

- Inter-trial interval

- Button pressed (0: no, 1: yes)
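
Assuming each row of the inference-task data is one trial and the columns appear in the order listed above, the sketch below selects inference test trials (trial type 3) and summarises accuracy and reaction time separately for cues whose associated visual stimulus was rewarded or neutral. The 0-based column indices are hypothetical and should be checked against the files before use.

```python
from statistics import mean
from typing import Dict, List, Sequence

# Hypothetical 0-based column indices, assuming the order listed above.
COL_REWARDED = 3     # rewarded visual stimulus (0: no, 1: yes)
COL_TRIAL_TYPE = 7   # trial type (2: conditioning, 3: inference)
COL_RT = 12          # reaction time for inference test trials
COL_CORRECT = 13     # response accuracy (0: incorrect, 1: correct)
INFERENCE = 3


def inference_summary(rows: Sequence[Sequence[float]]) -> Dict[str, Dict[str, float]]:
    """Accuracy and mean RT on inference trials, split by rewarded vs neutral cue."""
    groups: Dict[str, List[Sequence[float]]] = {"rewarded": [], "neutral": []}
    for row in rows:
        if row[COL_TRIAL_TYPE] != INFERENCE:
            continue  # skip conditioning trials
        key = "rewarded" if row[COL_REWARDED] == 1 else "neutral"
        groups[key].append(row)
    summary = {}
    for key, trials in groups.items():
        if trials:  # omit a condition with no trials rather than divide by zero
            summary[key] = {
                "n_trials": len(trials),
                "accuracy": mean(t[COL_CORRECT] for t in trials),
                "mean_rt": mean(t[COL_RT] for t in trials),
            }
    return summary
```

`rows` can be any sequence of rows, for example a two-dimensional array read from the .mat file.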

Behavioural data from the post-scan associative test:

On each trial of the associative test, participants were presented with an auditory cue and then asked which of the 4 visual stimuli was associated with it. The columns contain the following information:

1. Auditory stimulus number (1-80, each presented 3 times)

2. Visual stimulus associated with the presented auditory stimulus (1-4)

3. Migrating visual stimulus (1: no, 4: yes)

4. Rewarded visual stimulus (0: no, 1: yes)

5-8. Visual stimulus positions (top left/right, bottom left/right 1-4)

9-12. Wall on which each visual stimulus is presented (1-4)

13-16. Angle of visual stimulus still image (2-30)

17. Background image presented during auditory stimulus (2-57)

18. Chosen visual stimulus (1-4)

19. Reaction time

20. Response accuracy (0: incorrect, 1: correct)

21. Overall performance on presented visual stimulus

22. Overall performance on presented auditory stimulus (3 presentations)

23. Set during learning (1-8)
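
Because each auditory cue is presented three times in the associative test, performance can be summarised per cue. The sketch below assumes one row per trial with the numbered columns above (column k at 0-based index k - 1) and computes the proportion of correct responses for each auditory cue, which may correspond to column 22. The `threshold` used to label cues as ‘remembered’ or ‘forgotten’ is a hypothetical parameter; see the associated publication for the criterion actually used.

```python
from collections import defaultdict
from typing import Dict, Sequence

# 0-based indices for the numbered columns above (column k -> index k - 1).
COL_AUDITORY = 0   # column 1: auditory stimulus number (1-80)
COL_CORRECT = 19   # column 20: 0 incorrect, 1 correct


def accuracy_per_auditory_cue(rows: Sequence[Sequence[float]]) -> Dict[int, float]:
    """Proportion of correct responses for each auditory cue across its repeats."""
    n_correct: Dict[int, float] = defaultdict(float)
    n_shown: Dict[int, int] = defaultdict(int)
    for row in rows:
        cue = int(row[COL_AUDITORY])
        n_shown[cue] += 1
        n_correct[cue] += row[COL_CORRECT]
    return {cue: n_correct[cue] / n_shown[cue] for cue in sorted(n_shown)}


def label_cues(accuracy: Dict[int, float], threshold: float) -> Dict[int, str]:
    """Hypothetical labelling: 'remembered' if accuracy >= threshold, else 'forgotten'."""
    return {cue: "remembered" if acc >= threshold else "forgotten"
            for cue, acc in accuracy.items()}
```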

For a more detailed description of the scanning sequence and behavioural tasks, see the associated publication.

Researchers wishing to reuse our data are welcome to contact the creators of the dataset. If you are unfamiliar with analysing the type of data we are sharing, have questions about the acquisition methodology, need additional help understanding a file format, or are interested in collaborating with us, please get in touch via email. Email addresses for our current members are listed on our main site. The corresponding author of an associated publication, or the first or last creator of the dataset, is likely to be able to assist; if you are unsure whom to contact, email Ben Micklem, Research Support Manager at the MRC BNDU.

Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0)

This is a human-readable summary of (and not a substitute for) the licence.

You are free to:

Share — copy and redistribute the material in any medium or format

Adapt — remix, transform, and build upon the material for any purpose, even commercially.

This licence is acceptable for Free Cultural Works. The licensor cannot revoke these freedoms as long as you follow the license terms. Under the following terms:

Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

ShareAlike — If you remix, transform, or build upon the material, you must distribute your contributions under the same licence as the original.

No additional restrictions — You may not apply legal terms or technological measures that legally restrict others from doing anything the licence permits.